What do I mean by fail? Well, when you run an A/B test, you can get three different results: your change makes things better for the user, it makes things worse, or it makes no difference.

So when you work in a data-driven way and test changes continuously, you can expect about seven out of ten experiments to show that your change made things worse for the user or simply made no difference. But that's not bad - it's exactly what you want. If someone told me that only one out of ten of their tests fails, I would say they are testing the wrong things. Landing somewhere around 7/10 means you are testing things where the effect is genuinely uncertain. Moreover, a test is not bad just because it didn't produce a positive effect - the only bad experiments are those you don't learn anything from!

Joking aside, constantly glancing at how competitors have solved various problems in their digital channels doesn't align well with a data-driven approach. You don't know if the competitor has tested their way to the solution they have or if they even used data as a basis for their decisions. Basing your improvement suggestions on what the competitor does also risks hindering you from thinking innovatively - focus instead on what your users want!

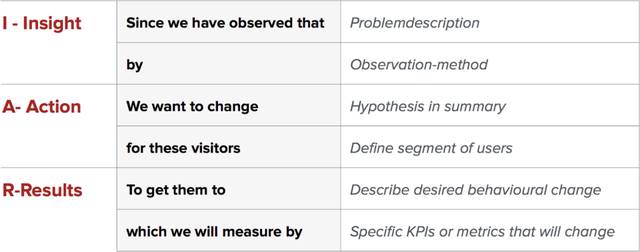

Okay, what do I mean by that? Isn't it the same thing? No, this is how we think at Conversionista: an idea is a loosely formulated ambition or direction with many possible variations. A hypothesis is a structured idea that tells you what it is based on, what is going to change, and what result you expect.

This makes a big difference for your experiments. A hypothesis as we define it requires data that supports it, a precise description of what is to be tested, and a clear goal that makes it possible to measure the result of the test. We usually use a hypothesis formula to make sure we have a good hypothesis. It's called IAR, which stands for Insight, Action, Result. It's a classic "fill in the blanks" sentence that helps you ensure you have a hypothesis worth testing!
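As a rough illustration, the three IAR parts could be captured in a small structure that refuses to call a hypothesis complete until every blank is filled in. This is only a sketch: the `Hypothesis` class, its field names, the wording of the sentence, and the example values are all assumptions made for illustration, not the official IAR formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """Hypothetical container for the three IAR parts."""
    insight: str  # the data or observation that motivates the test
    action: str   # the precise change to be tested
    result: str   # the measurable outcome you expect

    def is_complete(self) -> bool:
        # A hypothesis is only worth testing if no blank is left empty.
        return all(part.strip() for part in (self.insight, self.action, self.result))

    def sentence(self) -> str:
        # Illustrative wording only; the real IAR sentence may differ.
        return (
            f"Because we saw {self.insight}, "
            f"we expect that {self.action} "
            f"will lead to {self.result}."
        )


# Made-up example values.
example = Hypothesis(
    insight="a large drop-off on the shipping step",
    action="showing the shipping cost on the product page",
    result="more users completing checkout",
)
print(example.is_complete())
print(example.sentence())
```

An idea without supporting data would leave `insight` empty, and `is_complete()` would then return `False`.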

What it really comes down to is understanding your users. Good hypotheses come from good insight work that combines quantitative and qualitative methods to build a solid understanding of the user's problems and needs. Stop guessing - that belongs in the 20th century!

It might seem obvious. Of course I need to test where the user makes a decision. Or you might argue that the user is always making some decision? To influence the user, we need to know what information they need in order to decide. When a decision is to be made, for example about a purchase, make sure all the information needed to make it is available right there. So what I mean by testing where the user makes decisions is testing in places where the user absorbs information and makes a decision based on it.

Maybe you've run an A/B test on your homepage with a zero result? You're not alone. Just because you expose many people to your test doesn't mean you'll get a high impact rate. The user must be ready to absorb information and ready to make a final decision, which is much harder to achieve on the homepage than further down your funnel. So where might be a good place to test? Well, where you have a large drop-off, for example.

A/B testing is a really good method for determining the effect of a certain change, even when the effect is small. However, one problem with the method is that we always measure a shift in an average. That is, our result shows what works for the average of all users. A test can very well have a negative impact on one group of users while having a positive effect on another group. The result only shows whether the gain among those who were positively affected outweighs the loss among those who were negatively affected. This is both a strength and a weakness of the A/B testing method. It is also the source of a common cognitive trap regarding insights.
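The averaging effect described above can be sketched with a few lines of Python. The segment names and all numbers here are invented purely for illustration; the point is only that a small positive overall result can hide one group that gained and one that lost.

```python
# Hypothetical numbers, invented for illustration only.
# segment: (users_A, conversions_A, users_B, conversions_B)
segments = {
    "new visitors": (5000, 200, 5000, 260),
    "returning visitors": (5000, 400, 5000, 360),
}


def rate(conversions, users):
    """Conversion rate as a fraction."""
    return conversions / users


def summarize(segments):
    """Return the change in conversion rate (B minus A) per segment and overall."""
    diffs = {}
    totals = [0, 0, 0, 0]
    for name, (users_a, conv_a, users_b, conv_b) in segments.items():
        diffs[name] = rate(conv_b, users_b) - rate(conv_a, users_a)
        totals = [t + v for t, v in zip(totals, (users_a, conv_a, users_b, conv_b))]
    users_a, conv_a, users_b, conv_b = totals
    diffs["all users"] = rate(conv_b, users_b) - rate(conv_a, users_a)
    return diffs


for name, diff in summarize(segments).items():
    print(f"{name}: {diff:+.2%}")
```

With these made-up figures, new visitors convert better in the variation, returning visitors convert worse, and the overall number only reports the small net difference between the two.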

When you do research and find an interesting behavior, you must always quantify what you found before deciding on a change. You can very well find several conflicting statements about users when, for example, doing usability tests. That's not strange - not all users want the same thing. For that reason, you must always treat your findings as a starting point.

Found something in a usability test? Check how large a proportion it applies to with a quantitative tool. Found a drop-off? Do a qualitative test to find out why. Let no truths go unchallenged.

And remember: correlation is not the same as causation, but finding a correlation is an excellent basis for a hypothesis that you can test in an experiment.

Now that you've done everything else right, don't forget to check how many users you actually affect with your experiment and ultimately how much you can expect to get back from testing just there. You can very well come up with a really good insight when analyzing a flow that users go through, but it's still pointless to test in that flow simply because there are too few users to affect. A huge uplift of, for example, 20% helps little if you go from 100 to 120 purchases.

Here, statistical analysis also comes into play. A result is neither negative nor positive if it is not statistically significant. As a rule of thumb, you need thousands of users and hundreds of conversions to get a statistically significant result. Fortunately, this coincides quite well with the places where you can get impact. Moreover, there is a greater likelihood of statistically significant results when you have a larger effect!
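To make the rule of thumb a bit more concrete, here is a minimal sketch using only the Python standard library. It runs a pooled two-proportion z-test and a normal-approximation sample size estimate. The function names, the 5% significance level, the 80% power, and the assumption of 2,000 users per variation behind the "100 to 120 purchases" example are all my own choices for illustration; your testing tool may use a different statistical method.

```python
from math import ceil, sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, users_a, conv_b, users_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    rate_a = conv_a / users_a
    rate_b = conv_b / users_b
    pooled = (conv_a + conv_b) / (users_a + users_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


def users_needed_per_variant(base_rate, relative_uplift, alpha=0.05, power=0.8):
    """Approximate users per variation needed to detect the given uplift."""
    new_rate = base_rate * (1 + relative_uplift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = base_rate * (1 - base_rate) + new_rate * (1 - new_rate)
    return ceil((z_alpha + z_power) ** 2 * variance / (new_rate - base_rate) ** 2)


# Assumed traffic: 2,000 users per variation, 100 vs 120 purchases.
small = two_proportion_p_value(100, 2000, 120, 2000)
# Same rates and same 20% uplift, but ten times the traffic.
large = two_proportion_p_value(1000, 20000, 1200, 20000)
needed = users_needed_per_variant(base_rate=0.05, relative_uplift=0.20)

print(f"small flow p-value: {small:.3f}")
print(f"large flow p-value: {large:.6f}")
print(f"users needed per variation: {needed}")
```

Under these assumptions, the 20% uplift in the small flow is not significant at the 5% level, while the same uplift with ten times the traffic is. The sample size estimate lands in the thousands of users per variation, which at a 5% conversion rate also means hundreds of conversions.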

There is an interesting law called Twyman's Law, which states: “Any figure that looks interesting or different is usually wrong”. What it means for A/B testing is that when you find something really interesting, your first instinct should be to look for the error. One experiment we ran showed a doubling of add-to-cart. When we looked closer, it turned out that the experiment had introduced a bug where every add-to-cart action sent duplicate events, but only in the experiment variation, not in the original.

In another experiment I set up, we moved the price further down the funnel - in other words, the price was shown later in the customer journey. The result was that the click-through rate to checkout increased dramatically. It seemed very promising, but it turned out that the final conversion was not statistically significantly affected. The only thing we managed to do was move the point where the user made their decision (when they saw the price) to a later step, without getting them to convert at a higher rate.

You don't need to build a finished product - instead, test what would happen if the product existed. A buy button on a product that doesn't exist yet can, for example, lead the user to a message like "tell me when the product is in stock" and subsequent email collection.

Complicated experiments also risk leading to complicated analysis. Try to keep it simple, test one hypothesis at a time, and change only as much as needed to test the hypothesis. It is better to run a series of experiments, gather insights, and build on the results of previous experiments. Iterate, iterate, iterate!

Being curious, skeptical, and willing to constantly change your perception is at the core of data-driven work. One of the biggest obstacles to succeeding with that is the tendency to confirm the view of reality you already have. We are all affected and it is very natural. We can't possibly look at everything we see objectively and with fresh eyes. Pre-understanding is a prerequisite for seeing larger perspectives, how things are connected, and for making quick decisions. But it also risks leading to drawing the wrong conclusion or simply missing something new and interesting.

The basis for A/B testing is a scientific approach. You should try to disprove your hypothesis with everything you have. If you fail to do so, you can declare a new winner. Concretely, this means, for example, that you should never check many different metrics in order to find one that supports your hypothesis. This is not only because of confirmation bias; it also introduces a serious statistical problem called the “multiple comparisons problem”.
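A quick calculation shows why checking many metrics is a problem. The sketch below assumes the metrics are independent and each tested at a 5% significance level, which is a simplification, since real metrics are usually correlated. The Bonferroni threshold is included as one common, conservative correction; it is not something this article prescribes.

```python
def familywise_error_rate(alpha, num_metrics):
    """Chance of at least one false positive across independent metrics."""
    return 1 - (1 - alpha) ** num_metrics


def bonferroni_threshold(alpha, num_metrics):
    """Stricter per-metric significance level under a Bonferroni correction."""
    return alpha / num_metrics


for num_metrics in (1, 5, 20):
    risk = familywise_error_rate(0.05, num_metrics)
    threshold = bonferroni_threshold(0.05, num_metrics)
    print(f"{num_metrics:>2} metrics: risk of a false win {risk:.1%}, "
          f"corrected threshold {threshold:.4f}")
```

Under these assumptions, looking at 20 metrics gives you roughly a two-in-three chance that at least one of them looks like a "win" purely by chance, even if your change did nothing at all.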

How do you address this? Involve several people in experiment design and analysis, preferably people you usually disagree with. Be humble about your own ignorance. Avoid digging into data to find support for your hypothesis. Define in advance how the analysis should be conducted, what constitutes a successful result, and which related metrics should move and how.

Someone might object that we shouldn't take risks: think about what we could lose! I would like to turn that argument around. If we don't dare to experiment, it is almost certain that we miss out on untapped potential!

The key to successful A/B testing is continuous improvement. Don't stop after one test, regardless of the result. Use the insights gained from each experiment to inform the next one. Build on what you've learned, refine your hypotheses, and keep testing. The more you iterate, the more you learn, and the better your results will be over time.

A/B testing should not be a solo endeavor. Involve your team in the process, from brainstorming hypotheses to analyzing results. Different perspectives can lead to more creative solutions and help identify potential pitfalls. Collaboration also ensures that everyone is on the same page and working towards the same goals.

Keep detailed records of your A/B testing process, including your hypotheses, test setups, results, and insights. This documentation will be invaluable for future reference and can help you avoid repeating mistakes. It also provides a clear record of your progress and can be used to demonstrate the value of A/B testing to stakeholders.

A/B testing is a long-term strategy, and it can take time to see significant results. Stay patient and persistent, and don't get discouraged by setbacks. Remember that even "failed" tests provide valuable insights that can guide your future efforts. Keep testing, keep learning, and keep improving.

Finally, don't forget to celebrate your successes. When you achieve a significant uplift or gain a valuable insight, take the time to acknowledge and celebrate it. Recognizing your achievements can boost morale and motivate your team to keep pushing forward.

By following these principles, you can set yourself up for success with A/B testing. Remember, it's all about understanding your users, testing hypotheses, and continuously iterating to improve your results.

Happy testing!