"Have you ever heard a heated discussion between two analysts about which statistical method is better: Frequentist or Bayesian? Or heard experts discuss confidence intervals, while you're just nodding along? Or perhaps you're, at this very moment, getting worried about ever finding yourself in the middle of such topics. How do these kinds of conversations matter for business decisions anyway?"

That is right.

As students from KTH Royal Institute of Technology, we worked at Conversionista to investigate exactly how the presentation format of A/B test results affects business decisions in the real world. To figure this out, we ran a study with 39 decision-makers in e-commerce, showing them test results in either a Bayesian or a Frequentist format. These two formats are based on two completely different schools of thought within statistics.

To make the difference clear, let’s look at a simple example: Imagine you’re trying to figure out if a coin is fair.

A Bayesian says: “I start with a hunch, flip the coin, and update my belief about how fair it is as I see more flips.”

A Frequentist? “I just look at the flips I have and ask: how surprising is this result if the coin is fair?”
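If you prefer to see ideas in code, here is a minimal Python sketch of the two mindsets. It assumes a uniform Beta(1, 1) prior as the Bayesian's starting hunch and an exact two-sided binomial test for the Frequentist. The function names and the example of 8 heads in 10 flips are made up for illustration and are not part of the study.

```python
from math import comb


def posterior_mean_heads(heads, flips, prior_heads=1.0, prior_tails=1.0):
    """Bayesian view: update a Beta prior with the observed flips.

    Assumption: the prior is Beta(prior_heads, prior_tails); the default
    Beta(1, 1) is a flat "no strong hunch" prior. Returns the posterior
    mean of the probability of heads.
    """
    return (prior_heads + heads) / (prior_heads + prior_tails + flips)


def fair_coin_p_value(heads, flips):
    """Frequentist view: how surprising is this result if the coin is fair?

    Exact two-sided binomial test with P(heads) = 0.5: add up the
    probability of every outcome that is at most as likely as the one
    we observed.
    """
    observed_ways = comb(flips, heads)
    extreme_ways = sum(
        comb(flips, k) for k in range(flips + 1) if comb(flips, k) <= observed_ways
    )
    return extreme_ways / 2 ** flips


# Illustrative data only: 8 heads in 10 flips.
print(posterior_mean_heads(8, 10))  # 0.75
print(fair_coin_p_value(8, 10))     # 0.109375
```

Same flips, two different questions: the Bayesian number describes an updated belief about the coin, while the Frequentist number describes how unusual the data would be if the coin were fair.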

Our mission was to find out if the statistical engine behind A/B testing tools really mattered for the people making the decisions. As it turns out… It doesn't matter!

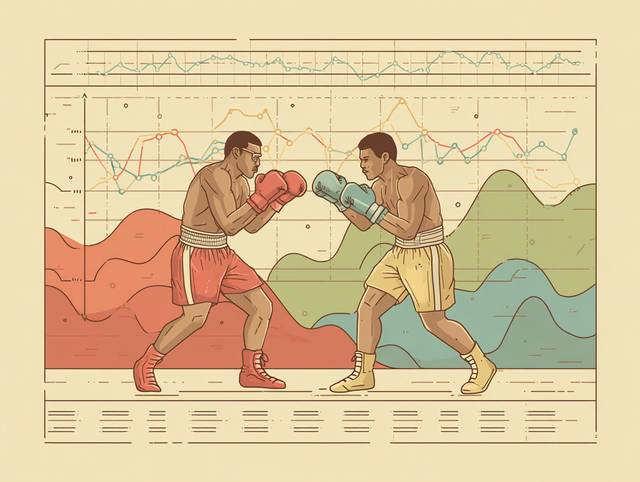

When we showed decision makers A/B test scenarios where the data was the same, just presented in different formats, we found that for:

Bayesian format

✔ Implement: 51.3%

✖ Don’t implement: 48.7%

Frequentist format

✔ Implement: 42.7%

✖ Don’t implement: 57.3%

Not exactly a knockout win for either side. And statistically speaking? No significant difference.
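For readers curious what a check like “no significant difference” can look like, below is a rough sketch of a two-proportion z-test in Python. This is not necessarily the test used in the study, and the counts in the example are invented for illustration; they are not the study's data. The sketch also assumes independent decisions, which would not hold exactly if the same person answered several scenarios.

```python
from math import erfc, sqrt


def two_proportion_p_value(yes_a, n_a, yes_b, n_b):
    """Two-sided p-value for the difference between two proportions.

    Uses the pooled two-proportion z-test with a normal approximation,
    so it is only reasonable for fairly large, independent samples.
    """
    rate_a = yes_a / n_a
    rate_b = yes_b / n_b
    pooled = (yes_a + yes_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # Everyone (or no one) said yes in both groups: no detectable difference.
        return 1.0
    z = (rate_a - rate_b) / std_err
    # Two-sided tail probability of a standard normal variable.
    return erfc(abs(z) / sqrt(2))


# Hypothetical counts, NOT from the study: 60 of 120 vs 50 of 120 "implement".
p_value = two_proportion_p_value(60, 120, 50, 120)
print(f"p-value: {p_value:.3f}")
print("significant at 0.05" if p_value < 0.05 else "no significant difference at 0.05")
```

With these made-up counts, a gap of several percentage points still lands well above the usual 0.05 threshold, which is the general pattern behind a “not a knockout win” result.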

At first, this result was disappointing, but then we decided to dig deeper to find out what actually mattered to the people making business decisions. And it turns out there was only one thing:

The bigger the improvement, the more likely people said “Yes.”

Shocking, we know. But here’s the kicker: when we interviewed participants afterwards, most admitted they didn’t really know what “p-value” or “chance to beat” meant.

One participant literally said:

“I tried to Google what it meant and still didn’t understand.”

What metrics did they use to make their decisions instead?

In other words, the simplest, most intuitive numbers. Everything else was background noise.

Whether it’s a frequentist p-value or a Bayesian chance to beat, most decision-makers treated them the same way:

👉 Ignored them entirely.

This isn’t just a UX problem. It’s a cognitive one. When people face statistics they don’t understand, especially under pressure, they skip the numbers and just make the call.

In fact, a trend in our data suggests that people with more statistical education are less likely to implement a change, potentially because they understand the risk better. But this was only a trend, not a statistically significant effect.

So ironically, the people who knew the least about the numbers tended to make the boldest decisions.

It’s not your test.

It’s not whether you used a Bayesian or frequentist statistics engine.

It’s how the results are communicated.

Usually, business decision-makers aren’t statisticians. They don’t want to parse your posterior distribution or interpret what “statistically significant at p < .05” really means. To be frank, they don’t care. They want to know:

And if your A/B test report doesn’t make that clear? They’ll default to what feels safe. Or worse: guess.

After talking to some of the participants, one thing became crystal clear: what decision-makers want is a test result that’s as simple as these:

🙂 Go for it.

😐 Meh, not sure.

🙁 Nope, not worth it.

Forget statistical nuance: they’re looking for clarity, not complexity.
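As a rough illustration of how an analyst might translate test output into one of these three verdicts, here is a small Python sketch. The function name, the inputs, and every threshold are hypothetical choices made for this example; they did not come from the study, and any real cut-offs should be agreed with your own stakeholders.

```python
def verdict(chance_to_beat, relative_uplift,
            go_threshold=0.95, stop_threshold=0.50, min_uplift=0.01):
    """Turn two test numbers into a plain-language recommendation.

    chance_to_beat:  estimated probability that the variant beats control (0 to 1).
    relative_uplift: estimated relative improvement, e.g. 0.04 for +4%.
    All thresholds are hypothetical defaults, not recommendations.
    """
    if chance_to_beat >= go_threshold and relative_uplift >= min_uplift:
        return "Go for it."
    if chance_to_beat < stop_threshold or relative_uplift <= 0:
        return "Nope, not worth it."
    return "Meh, not sure."


print(verdict(0.97, 0.04))   # Go for it.
print(verdict(0.70, 0.02))   # Meh, not sure.
print(verdict(0.30, -0.01))  # Nope, not worth it.
```

The point is not the specific numbers but the order of things: the recommendation comes first, and the statistics stay available for anyone who wants to look under the hood.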

For analysts and experts

Whether you use Bayesian or frequentist tools, it doesn’t matter if your audience doesn’t understand either. Spend less time picking sides and more time thinking of how to communicate results effectively.

Focus on what decision-makers want to know. Prioritise clarity over statistical complexity, ensuring your insights are directly relevant to business goals.

Provide clear recommendations based on your data. Decision-makers want to know what actions to take, not just receive raw numbers. Ensure your reports lead with potential business outcomes.

For organisations and decision makers

Even just 10 minutes of training on what a p-value does and doesn’t mean can change how people treat test results. Better yet: give examples. Real ones.

Don’t expect every decision-maker to become a statistician. That’s what your analysts are for. Involve them not just in crunching numbers, but in storytelling: translating complexity into clarity. A good analyst doesn’t just hand over a dashboard; they walk you through what it means, what’s uncertain, and what’s actionable.

You can fight over which statistical engine is the best all day. But unless your stakeholders understand what those numbers mean for the business, it’s like arguing about which type of screwdriver is better while the furniture sits unassembled.

This study reminded us of a simple truth:

Data alone doesn’t drive decisions. Understanding the data does.

So, whether you're Bayesian, Frequentist, or just trying to survive another roadmap meeting, don’t forget who the numbers are for.

It’s not about being technically correct.

It’s about being understandably useful.
