So, you've just completed a successful A/B test where the variant had a significantly better conversion rate than the default. Now management wants to know how much extra money the company will make in a year if the variant is implemented.

What’s your reply?

It's tempting to calculate the extra revenue generated by the users in the variant and just multiply it by 12, assuming you’ve run your experiment for a month. In fact, several sites devoted to A/B-testing and CRO will tell you to do something along these lines.

But by doing so, you're ignoring several important factors of uncertainty in the situation.

My colleagues and I at Conversionista! know that working in an experiment-driven way is a great way to boost your results. By showcasing the numbers on uplift and revenue, you can illustrate just how important it is to embrace experimentation and level up your tests throughout the organization. However, we also know it’s crucial to be cautious when projecting future results based on experiment data.

Let's dive into an example to uncover the caveats!

Assume you have a business selling one type of wine. You’re thinking about changing the label on the bottles, and run an A/B test to see if this will influence the conversion rate.

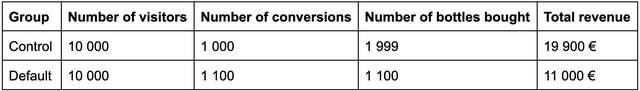

You display the old bottle to 10 000 visitors, of which 1 000 decide to buy, giving you a conversion rate of 10%.

The new label bottle is also displayed to 10 000 visitors, of which 1 100 decide to buy, giving you a conversion rate of 11%.

A common approach to testing the difference between the groups is a statistical z-test for proportions. Such a test would tell you that there is a significant difference between the variants, indicating that the new label is better.
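As a rough illustration, here is a minimal sketch of such a test in Python, using the visitor and purchase counts from the example. The function name `two_proportion_z_test` is my own, and the sketch assumes the pooled, two-sided version of the test with a normal approximation. Your testing tool may use a different variant.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Pooled, two-sided z-test for the difference between two proportions."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Under the null hypothesis both groups share one conversion rate,
    # so the standard error is based on the pooled proportion.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Old label: 1 000 of 10 000 visitors buy. New label: 1 100 of 10 000.
z, p_value = two_proportion_z_test(1_000, 10_000, 1_100, 10_000)
print(f"z = {z:.2f}, two-sided p = {p_value:.3f}")
```

With these numbers the p-value comes out at roughly 0.02, which is below the conventional 0.05 threshold.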

Now, you want to calculate how much extra revenue the label change will generate.

In the control group, 999 of the customers bought one bottle of wine at the price of 10 €, but one customer decided to buy 1 000 bottles. This leads to 19 990 € in total revenue, an average order value (AOV) of 19,99 € and an average revenue per visitor of roughly 2,00 €.

In the variant group, all the customers bought only one bottle of wine each, generating 11 000 € in total revenue, an AOV of 10 € and an average revenue per visitor of 1,1 €.

So, the revenue in your variant is actually lower – even though the conversion rate is higher!
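If you want to check the arithmetic yourself, the sketch below recomputes the three revenue metrics from the raw orders in the example. The helper `revenue_metrics` and the order lists are made up for illustration. In practice you would load the order values from your own analytics data.

```python
def revenue_metrics(order_values, visitors):
    """Total revenue, average order value and revenue per visitor."""
    total = sum(order_values)
    return {
        "total_revenue": total,
        "aov": total / len(order_values),
        "revenue_per_visitor": total / visitors,
    }


PRICE = 10  # € per bottle, as in the example

# Control: 999 single-bottle orders plus one order of 1 000 bottles.
control_orders = [PRICE] * 999 + [1_000 * PRICE]
# Variant: 1 100 single-bottle orders.
variant_orders = [PRICE] * 1_100

control = revenue_metrics(control_orders, visitors=10_000)
variant = revenue_metrics(variant_orders, visitors=10_000)

for name, metrics in (("control", control), ("variant", variant)):
    print(name, metrics)
```

Note how a single bulk order accounts for about half of the control group's revenue.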

While this may seem like a simplified example, it puts the spotlight on some of the main issues:

Apart from what’s mentioned above, there are several other factors of uncertainty as well. For example, the number of conversions will of course depend on the traffic to your page. This is yet another random factor that has not been accounted for. External factors like seasonality, economy, competition and so on may influence both the conversion rate and order values in the future. Such effects can be both negative and positive.

Furthermore, the effect of the variant you implement may wear off over time or even be limited to a specific time period. It should also be noted that, when dealing with noisy data like revenue and doing experiments with low power, your estimates may be subject to what’s called Type M (magnitude) error. That is, an effect that comes out as statistically significant is likely to be an overestimate of the true effect.
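To get a feeling for Type M error, here is a small simulation sketch. The numbers are purely hypothetical and not taken from any real experiment: I assume a true uplift of 0,05 € in revenue per visitor, a standard error of the same size, and normally distributed estimates. The function `exaggeration_ratio` is my own naming, loosely following the idea described by Gelman and Carlin.

```python
import random
from statistics import NormalDist, mean


def exaggeration_ratio(true_effect, standard_error, alpha=0.05,
                       n_sims=20_000, seed=1):
    """Simulate repeated experiments and return (power, exaggeration ratio).

    The exaggeration ratio is the average absolute size of the
    statistically significant estimates divided by the true effect.
    Assumes a non-zero true effect and at least one significant result.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    significant = []
    for _ in range(n_sims):
        # One simulated experiment: the estimate is the truth plus noise.
        estimate = rng.gauss(true_effect, standard_error)
        if abs(estimate) > z_crit * standard_error:
            significant.append(abs(estimate))
    power = len(significant) / n_sims
    return power, mean(significant) / abs(true_effect)


# Hypothetical inputs: true uplift 0.05 €, standard error 0.05 €.
power, ratio = exaggeration_ratio(true_effect=0.05, standard_error=0.05)
print(f"power = {power:.2f}, exaggeration ratio = {ratio:.1f}")
```

Under these assumptions the test rarely reaches significance, and when it does, the estimated uplift is on average well above the true one.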

Last but not least, the standard methods used to analyze experiments are statistical hypothesis tests, not forecasting models. Once you start predicting future effects, you are taking a leap into uncharted territory.

That being said, it’s not unreasonable to assume that a variant which increases the conversion rate will have a positive effect on revenue. But to put an exact measure on it, and to predict future revenue, is evidently a lot more complicated.

It’s not necessarily wrong to make some naive calculations, like multiplying the revenue effect by 12, and present them. If you find that this is the most straightforward and easy way to do it in your case, by all means, do it.
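For reference, this is what the naive calculation could look like for the wine example, sketched in Python. It assumes the test ran for one month and that both groups saw the same number of visitors, so the revenue totals can be compared directly. The function name `naive_annual_uplift` is hypothetical. The second call shows how much the projection depends on one single order.

```python
def naive_annual_uplift(control_revenue, variant_revenue, months_observed=1):
    """Scale the observed revenue difference to 12 months.

    Assumes equally sized groups and that the observed period is
    representative of the whole year, which is rarely true.
    """
    return (variant_revenue - control_revenue) * 12 / months_observed


PRICE = 10  # € per bottle, as in the example
control_orders = [PRICE] * 999 + [1_000 * PRICE]  # includes the bulk order
variant_orders = [PRICE] * 1_100

with_bulk_order = naive_annual_uplift(sum(control_orders), sum(variant_orders))

# Same calculation with the single 1 000-bottle order left out.
control_without_bulk = [value for value in control_orders if value == PRICE]
without_bulk_order = naive_annual_uplift(
    sum(control_without_bulk), sum(variant_orders)
)

print(f"Including the bulk order: {with_bulk_order:,.0f} € per year")
print(f"Excluding the bulk order: {without_bulk_order:,.0f} € per year")
```

The sign of the projected effect flips depending on whether that one order is included, which is a good reason to present such figures with their assumptions spelled out.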

However, I believe it is imperative to be very clear and transparent about which assumptions you’ve made, and the uncertainty in your measures. Here are some suggestions:

We know it can be a bit tricky sometimes, but remember – we’ve got your back! Swing by our local office for a coffee, give us a call, or send an email to hej@conversionista.se and we'll happily deep dive into this together.

Can you really tie A/B testing to revenue? – Collin Tate Crowell, Kameleoon.com

Bayesian A/B Testing at VWO (includes methods for modeling revenue) – Chris Stucchio, VWO

Beyond Power Calculations: Assessing Type S (Sign) and Type M (Magnitude) Errors – Andrew Gelman and John Carlin

An Introduction to Type S and M errors in Hypothesis Testing – Andrew Timm

Overcoming the winner’s curse: Leveraging Bayesian inference to improve estimates of the impact of features launched via A/B tests – Ryan Kessler, Amazon
