Let's start by defining what we mean by an experiment program. We often say that anyone can run an A/B test, but very few organizations are good at working experimentally across the entire organization and consistently delivering insights over time.

You need a clear process and methodology. You need buy-in from management. You need tools and IT resources. You need competent colleagues who can design robust experiments and then analyze the outcomes. And this is exactly where the first thing can go wrong:

All of this seems like too much: "This is going to be expensive and difficult. Where should we start? Maybe it's best to wait…"

So you never get started, and you miss all the benefits of working experimentally. Then it might sound something like this:

"It's not for us. It's too expensive. We have other priorities."

It's NEVER wrong to start with a few simple A/B tests. Test your wings. Make a few mistakes. Get a few quick wins. Spark some curiosity and enthusiasm. Get a bit more budget. And then you're off. Congratulations!

I say it's never wrong to work this way, and it really isn't. But things can still go wrong, which brings us to problem number 2.

We are all beginners at the start. Therefore, you will make most of the classic beginner mistakes. That's okay. **What is not okay is not learning from them and moving from the crawling to the walking stage.**

In the beginning, it's easy to test. Okay – there are a lot of technical and analytical things you need to learn, but the good news is that most organizations have plenty of low-hanging fruit to pick at the start. And when you've picked it and see the results, you become the hero at work. Woohoo!

Eventually, that fruit runs out, and it becomes harder to show improvements of 10, 20, or maybe 30 percent. Now you're down to 1, 2, or 3 percent, and it's not as fun anymore.

You've been engaging in what we call shotgun testing. You spray test ideas a bit wildly, and several of them seem to work well enough. So why do it any other way?

Another popular name for this phase:

"Throw something on the wall and see if it sticks."

You've probably also made some analytical mistakes and implemented what you thought were winners but were actually losers. Now your colleagues come back and ask where the promised improvements went. You don't know what to say. The honeymoon is over.

Here it's easy to give up and conclude that:

"A/B testing is not for us. It probably works for others, but we don't think it's the right thing for us because our business is special." Something like that.

Here comes a moment of truth. Despite declining results, you must convince those holding the purse strings that now is the time to invest more. Not less. Not all experiment teams survive here.

Time to solve problem number 3.

What you've done, probably without thinking about it, is work on only one end of the prioritization scale.

The simplest type of prioritization model, which we all use every day, is effort versus reward, cost vs. benefit. What do I have to do, and what do I get back?

When we focus on picking all the low-hanging fruit, we implicitly choose to do only the things that are easy: low cost, low effort, high ease. You may not have worked at all on the other end of the scale, "what do I get back," or impact.

Once you've picked your low-hanging fruit, impact declines. In the beginning, you were lucky and got impact here and there with your "shotgun tests." You ran quite a few tests, so naturally some of them delivered good results. But now that the easy wins are running out, you don't know what to look for, or where.
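To make the effort-versus-reward trade-off concrete, here is a minimal Python sketch. It assumes each test idea gets a hypothetical 1-10 score for Ease and for Impact and that the two are simply averaged; the idea names, scores, and the averaging rule are illustrative assumptions, not a fixed method. Ranking on ease alone puts the cheapest idea first, while adding impact moves the harder but more valuable idea to the top.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    name: str
    ease: int    # 1-10: low cost and low effort = high score (hypothetical scale)
    impact: int  # 1-10: how much we expect to get back (hypothetical scale)

    def score(self) -> float:
        # Assumption: weigh effort and reward equally by averaging the two scores.
        return (self.ease + self.impact) / 2


# Hypothetical backlog; names and scores are made up for illustration.
backlog = [
    TestIdea("Change button color", ease=9, impact=2),
    TestIdea("Rewrite headline", ease=8, impact=4),
    TestIdea("Simplify checkout flow", ease=4, impact=9),
]

# Picking low-hanging fruit: rank on ease alone.
by_ease = sorted(backlog, key=lambda idea: idea.ease, reverse=True)
# Using both ends of the scale: rank on the combined score.
by_score = sorted(backlog, key=lambda idea: idea.score(), reverse=True)

print("Ease only:    ", [idea.name for idea in by_ease])
print("Ease + impact:", [idea.name for idea in by_score])
```

With these made-up numbers the two rankings come out in exactly opposite order, which is the "only one end of the scale" problem in miniature.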

Now experimentation gets serious. So far, you've managed quite well without specific knowledge of optimization; mostly it's been about knowing the tools, coding the test scripts correctly, and so on. But now you must seriously learn the difficult art of not only testing the right way, but above all, testing the right things. Or, as one of the real heavyweights in the field, Peep Laja at CXL, puts it:

"The most difficult part: The discovery of what really matters."

To reach this next critical step, you must start diving deep into:

- Understanding your customers' behavior (web psychology)

- Discovering behaviors (with quantitative data and qualitative user studies)

- Formulating robust hypotheses, and last but not least

- Learning a structured prioritization method.

The best places to learn web psychology and data analysis are blogs on the subject, e.g., CXL, or our own blog. If you want to reach a professional level quickly, I recommend our own training, Conversion Manager, which we run every semester.

To learn how to formulate hypotheses, I suggest you use our hypothesis formula.

I want to end by highlighting a prioritization method, because we have come to the fourth and final thing that can go wrong in your experiment program.

Let's say you've now figured out how to find tests that deliver high impact. You're even quite proud to announce 5, 10, or 15 percent improvements in your tests. Yet hardly anyone cares. Your colleagues have a hard time seeing the business results of the changes your tests suggest.

Then it's time to take the next step with your prioritization formula.

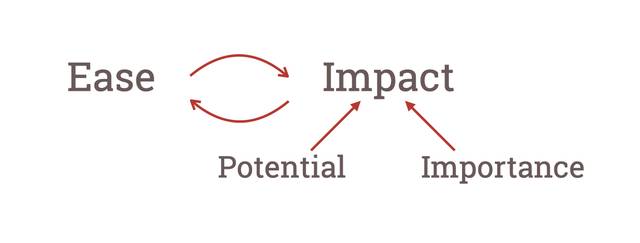

So far, we've looked at Ease and Impact. The problem is that you can have a great Impact in a place that doesn't matter for your business. Say 30% more visitors use your search function, but purchases don't increase. Or 20% more use your price calculator, but it doesn't grow your business.

To get past the problem, we suggest you use the PIE model developed by our Canadian partner, WiderFunnel.

Now you divide Impact into Potential and Importance. Potential is the local improvement you can achieve (5, 10, or 15%), and Importance is how much the change matters in relation to your business goals.
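Here is a minimal Python sketch of the difference the extra factor makes. It assumes the common description of PIE, where each idea is scored 1-10 on Potential, Importance, and Ease and the PIE score is the plain average of the three; the idea names and scores are hypothetical, chosen to mirror the search-function example above. For comparison, the two-factor score treats "impact" as local potential only.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    potential: int   # 1-10: local improvement we think is possible
    importance: int  # 1-10: how much this area matters for business goals
    ease: int        # 1-10: how cheap and quick the test is to run

    def ease_impact(self) -> float:
        # Two-factor model: "impact" only reflects local potential.
        return (self.potential + self.ease) / 2

    def pie(self) -> float:
        # PIE (as commonly described): average of Potential, Importance, Ease.
        return (self.potential + self.importance + self.ease) / 3


# Hypothetical ideas and scores, made up for illustration.
ideas = [
    Idea("Search function", potential=9, importance=2, ease=7),
    Idea("Checkout page", potential=6, importance=9, ease=5),
]

old_top = max(ideas, key=Idea.ease_impact)
pie_top = max(ideas, key=Idea.pie)

print("Ease/impact picks:", old_top.name)  # Search function (8.0 vs 5.5)
print("PIE picks:", pie_top.name)          # Checkout page (6.7 vs 6.0)
```

The high-potential but unimportant search change wins under ease and impact alone, and loses to the checkout page once Importance is scored.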

An important effect of working this way is that you have to start talking to your colleagues to understand what your most important goals really are (so you can focus on just those).

When you work close to the company's most important, prioritized projects, the chance that your work is seen as an important part of its growth strategy also increases.

What you absolutely DO NOT want is for your BIG AND IMPORTANT projects to be driven by "good old-fashioned gut feeling" instead of an experimental, data-driven strategy, while your work is relegated to an experiment workshop in the basement where you tinker with the current site and the innovation team steers your growth projects.

If you feel you've gotten stuck in any of the pitfalls we've discussed here, I hope something we've highlighted can help you in your work going forward.